ETCB - ETL System

Project Overview

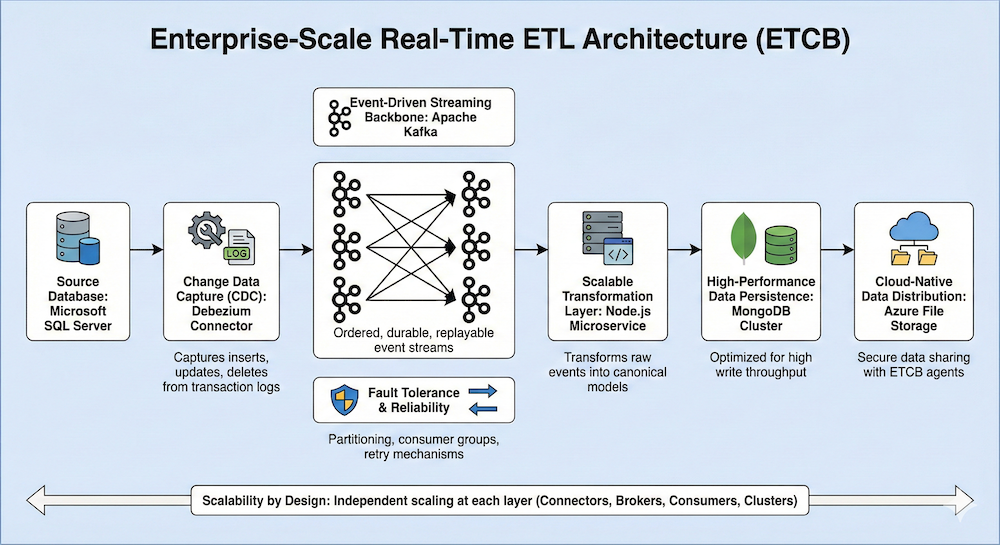

Architected a high-throughput, enterprise-grade ETL and real-time data pipeline for ETCB, a stock market services company.

The solution was designed to handle millions of database changes per second, enabling reliable, near real-time data processing at massive scale. Using Change Data Capture (CDC), the system continuously monitors Microsoft SQL Server for data changes and streams them efficiently through Debezium and Kafka.

A scalable Node.js transformation layer converts streaming events into standardized canonical data models, which are then persisted in MongoDB for downstream processing.
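The transformation step can be illustrated with a minimal sketch. The `op`, `before`, `after`, `source`, and `ts_ms` fields follow the standard Debezium change-event envelope; the `CanonicalChange` shape, the `toCanonical` function, and the `keyField` parameter are hypothetical names chosen for illustration, since the actual ETCB canonical models are not shown here.

```typescript
// Subset of the Debezium change-event payload used by this sketch.
interface DebeziumPayload {
  op: "c" | "u" | "d" | "r"; // create, update, delete, snapshot read
  before: Record<string, unknown> | null;
  after: Record<string, unknown> | null;
  source: { db: string; schema: string; table: string };
  ts_ms: number;
}

// Hypothetical canonical model (an assumption, not the real ETCB schema).
interface CanonicalChange {
  entity: string;
  operation: "upsert" | "delete";
  key: string;
  data: Record<string, unknown> | null;
  capturedAt: string;
}

// Map one raw CDC event to the canonical shape.
// Deletes carry the row image in `before`; all other operations use `after`.
function toCanonical(p: DebeziumPayload, keyField: string): CanonicalChange {
  const row = p.op === "d" ? p.before : p.after;
  if (row === null || row[keyField] === undefined) {
    throw new Error(`change event for ${p.source.table} has no "${keyField}" value`);
  }
  return {
    entity: `${p.source.db}.${p.source.schema}.${p.source.table}`,
    operation: p.op === "d" ? "delete" : "upsert",
    key: String(row[keyField]),
    data: p.op === "d" ? null : row,
    capturedAt: new Date(p.ts_ms).toISOString(),
  };
}
```

Collapsing creates, updates, and snapshot reads into a single `upsert` operation is one possible design choice: it lets the persistence layer treat replayed events the same way as first-time events.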

From MongoDB, the data is programmatically exported to Azure File Storage, where ETCB’s agent-based systems securely consume and process it.
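As a rough sketch of the export step, the function below groups records by entity and serializes each group as newline-delimited JSON, ready to be written as one file per entity. The `ExportRecord` and `ExportFile` types, the `buildExportFiles` function, the `.ndjson` format, and the path layout are all assumptions made for illustration; the actual file format and the upload call to Azure are not described here and are left out.

```typescript
// Hypothetical record shape read back from the document store.
interface ExportRecord {
  entity: string;
  key: string;
  data: Record<string, unknown>;
}

// One file to be written to the share: a relative path plus its contents.
interface ExportFile {
  path: string;
  body: string;
}

// Group records by entity and emit one NDJSON file per entity for this batch.
function buildExportFiles(records: ExportRecord[], batchId: string): ExportFile[] {
  const byEntity: Record<string, ExportRecord[]> = {};
  for (const r of records) {
    const group = byEntity[r.entity] ?? [];
    group.push(r);
    byEntity[r.entity] = group;
  }
  return Object.keys(byEntity).map((entity) => {
    const group = byEntity[entity] ?? [];
    // One JSON document per line, with a trailing newline.
    const lines = group.map((r) => JSON.stringify({ key: r.key, data: r.data }));
    return { path: `${entity}/${batchId}.ndjson`, body: lines.join("\n") + "\n" };
  });
}
```

Keeping serialization separate from the upload makes the file contents easy to test without a storage account.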

This robust, fault-tolerant ETL architecture ensures data consistency, scalability, and real-time availability, empowering ETCB to deliver high-performance services to its clients with confidence.

Enterprise-Scale Real-Time ETL Architecture (ETCB)

- Change Data Capture (CDC): Implemented a real-time CDC pipeline using Debezium to continuously monitor Microsoft SQL Server transaction logs and capture every insert, update, and delete with minimal impact on the source database.
- Event-Driven Streaming Architecture: Leveraged Apache Kafka as a distributed streaming backbone to reliably ingest and process millions of data changes per second, ensuring ordered, durable, and replayable event streams.
- Scalable Transformation Layer: Built a horizontally scalable Node.js microservice to transform raw CDC events into standardized canonical data models, enabling consistent data consumption across multiple downstream systems.
- High-Performance Data Persistence: Persisted transformed data into MongoDB, optimized for high write throughput and low-latency access, supporting real-time and batch consumption patterns.
- Fault Tolerance & Reliability: Ensured resilience through Kafka partitioning, consumer groups, offset management, and retry mechanisms, allowing seamless recovery from failures without data loss.
- Cloud-Native Data Distribution: Programmatically exported processed data to Azure File Storage, enabling secure and scalable data sharing with ETCB’s agent-based systems.
- Scalability by Design: Designed the entire pipeline to scale independently at each layer (CDC connectors, Kafka brokers, Node.js consumers, MongoDB clusters), supporting growing data volumes and peak market activity.
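The retry mechanisms mentioned under fault tolerance could look roughly like the following sketch: a capped exponential backoff wrapped around any asynchronous step, such as a database write. The names `backoffDelayMs` and `withRetry`, the default delays, and the attempt limit are illustrative assumptions, not the values used in the ETCB pipeline.

```typescript
// Delay before the next attempt: baseMs, 2*baseMs, 4*baseMs, ... capped at maxMs.
function backoffDelayMs(attempt: number, baseMs = 100, maxMs = 5000): number {
  return Math.min(maxMs, baseMs * Math.pow(2, attempt));
}

// Run `task`, retrying on failure up to `maxAttempts` times in total.
// `sleep` is injectable so the wait can be stubbed out in tests.
async function withRetry<T>(
  task: () => Promise<T>,
  maxAttempts = 5,
  sleep: (ms: number) => Promise<void> = (ms) =>
    new Promise<void>((resolve) => setTimeout(resolve, ms))
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await task();
    } catch (err) {
      lastError = err;
      // Wait only if another attempt will follow.
      if (attempt < maxAttempts - 1) {
        await sleep(backoffDelayMs(attempt));
      }
    }
  }
  throw lastError;
}
```

In a consumer built this way, the Kafka offset for a message would typically be committed only after `withRetry` resolves, so a crash mid-processing leads to a replay of the message rather than a lost change.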

Technologies Used

Microsoft SQL Server, Debezium, Apache Kafka, Node.js, MongoDB, Azure File Storage
